
The Bitter Religion: AI’s Holy War Over Scaling Laws

The AI community is locked in a doctrinal battle about its future and whether sufficient scale will create God.

🌟 This is a free edition of The Generalist.

Friends,

Today’s piece is written by the brilliant Michael Dempsey. Besides being the managing partner at Compound, a thesis-driven venture firm, Michael is one of the most consistently interesting, differentiated thinkers in tech. Whenever I talk to him, I’m left with either a new rabbit hole to tumble down or an altered perspective on an industry I felt I knew well.

Because of that, I invited Michael to share his perspective in The Generalist. He did not disappoint. Today’s piece, “AI’s Holy War,” is an analysis of a fiercely contested topic in the AI community that stands to impact our lives in big and little ways. It is told with the rigor of an insider and the perspective of a keen student of history. I expect you’ll hear much more about this debate in the coming weeks and months, and Michael’s piece sets you up to understand the heart of it and what it may mean.

I hope you enjoy it as much as I did.

— Mario

👋 P.S. If you’re an investor with a differentiated, contrarian thesis or perspective you think our readership would enjoy, send a brief pitch by replying to this email. We’re interested in publishing deeply researched, wholly original pieces that translate a technological frontier, explain emerging dynamics, highlight a growing opportunity, or question accepted wisdom.

AI’s Holy War

I would rather live my life as if there is a God and die to find out there isn't, than live as if there isn't and die to find out that there is.

– Blaise Pascal

Religion is a funny thing. It is entirely unprovable in either direction and perhaps the canonical example of a favorite phrase of mine: “You can’t bring facts to a feelings fight.”

The thing about religious beliefs is that on the way up, they accelerate at such an incredible rate that it becomes nearly impossible to doubt God. How can you doubt a divine entity when the rest of your people increasingly believe in it? What place is there for heresy when the world reorders itself around a doctrine? When temples and cathedrals, laws and norms, arrange themselves to fit a new, implacable gospel?

When the Abrahamic religions first emerged and spread across continents, or when Buddhism expanded from India throughout Asia, the sheer momentum of belief created a self-reinforcing cycle. As more people converted and built elaborate systems of theology and ritual around these beliefs, questioning the fundamental premises became progressively more difficult. It is not easy to be a heretic in an ocean of credulousness. Grand basilicae, intricate religious texts, and thriving monasteries all served as physical proof of the divine.

But the history of religion also shows us how quickly such structures can crumble. The collapse of the Old Norse creed as Christianity spread through Scandinavia happened over just a few generations. The Ancient Egyptian religious system lasted millennia, then vanished as newer, lasting beliefs took hold and grander power structures emerged. Even within religions, we’ve seen dramatic fractures – the Protestant Reformation splintered Western Christianity, while the Great Schism divided the Eastern and Western churches. These splits often began with seemingly minor disagreements about doctrine, cascading into completely separate belief systems.

The holy text

God is a metaphor for that which transcends all levels of intellectual thought. It’s as simple as that.

– Joseph Campbell

Simplistically, to believe in God is religion. Perhaps to create God is no different.

Since the field’s inception, optimistic AI researchers have imagined their work as an act of theogenesis – the creation of a God. The last few years, defined by the explosive progression of large language models (LLMs), have only bolstered the belief among adherents that we are on a holy path.

That progress has also vindicated a blog post written in 2019. Though unknown to those outside of AI until recently, Canadian computer scientist Richard Sutton’s “The Bitter Lesson” has become an increasingly important text in the community, evolving from hidden gnosis to the basis of a new, encompassing religion.

In 1,113 words (every religion needs sacred numbers), Sutton outlines a technical observation: “The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin.” AI models improve because computation becomes exponentially more available, surfing the great wave of Moore’s Law. Meanwhile, Sutton remarks that much of AI research focuses on optimizing performance through specialized techniques – adding human knowledge or narrow tooling. Though these optimizations may help in the short term, they are ultimately a waste of time and resources in Sutton’s view, akin to fiddling with the fins on your surfboard or trying out a new wax as a terrific surge gathers.

This is the basis of what we might call “The Bitter Religion.” It has one and only one commandment, usually referred to in the community as the “scaling laws”: exponentially growing computation drives performance; the rest is folly.

The Bitter Religion has spread from LLMs to world models and is now proliferating through the unconverted bethels of biology, chemistry, and embodied intelligence (robotics and AVs). (I covered this progression in depth in an earlier post.)

However, as Sutton’s doctrine has spread, the definitions have begun to morph. This is the sign of all living and lively religions – the quibbling, the stretching, the exegesis. “Scaling laws” no longer means just scaling computation (the Ark is not just a boat) but refers to various approaches designed to improve the performance of transformers and compute, with a few tricks along for the ride.

The canon now encapsulates attempts to optimize every part of the AI stack, ranging from tricks applied to the core models themselves (merged models, mixture of experts or MoE, and knowledge distillation) all the way to generating synthetic data to feed these ever-hungry Gods, with a lot of experimentation in between.

The warring sects

The question roiling through the AI community recently with the tenor of a holy war is whether The Bitter Religion is still true.

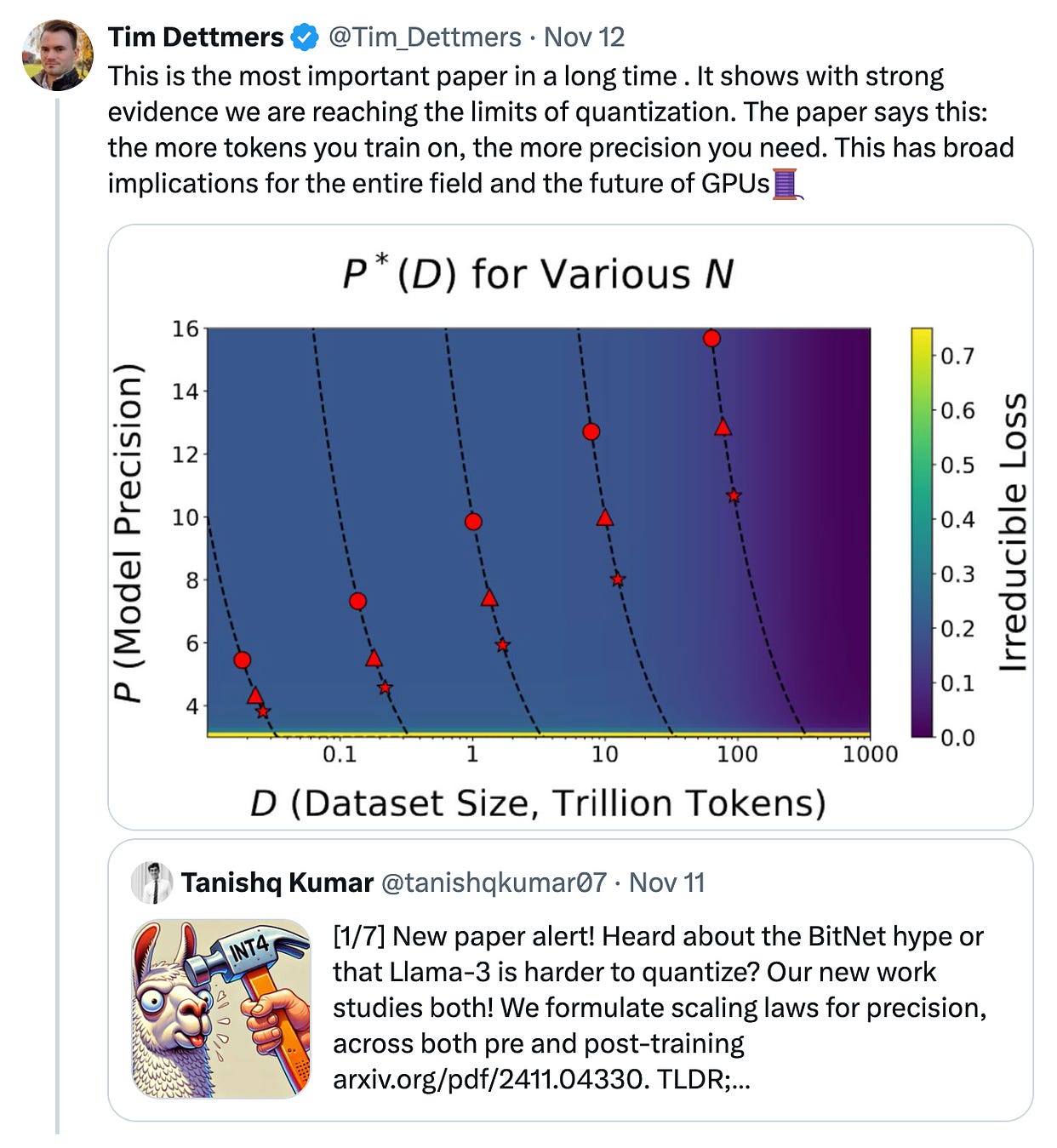

A new paper out of Harvard, Stanford, and MIT, titled “Scaling Laws for Precision,” stoked the conflict this week. The paper suggested that the efficiency gains from quantization are nearing their end. Quantization is a range of techniques that represent a model’s numbers at lower precision so it is cheaper and faster to run, and it has been of great use to the open-source ecosystem. Tim Dettmers, a research scientist at the Allen Institute for Artificial Intelligence, outlined the paper’s significance in a thread, calling it “the most important paper in a long time.” It represents the continuation of a conversation that has been bubbling for the past few weeks and reveals a notable trendline: the growing solidification of two religions.

OpenAI CEO Sam Altman and Anthropic CEO Dario Amodei stand in one sect. Both have stated, with some mix of confidence, marketing, and perhaps trolling, that we will have Artificial General Intelligence (AGI) within roughly the next two to three years. Both Altman and Amodei are arguably the most reliant on the divinity of The Bitter Religion. Their incentives all push them to overpromise and create maximum hype to accumulate capital in a game that is quite literally dominated by economies of scale. If scaling is not the Alpha and the Omega, the First and the Last, the Beginning and the End, then what do you need 22 billion dollars for?

Former OpenAI Chief Scientist Ilya Sutskever adheres to a different set of tenets. He is joined by other researchers (including many from within OpenAI, per recent leaks) who believe that scaling is approaching a ceiling. This group believes that novel science and research will be required to maintain progress and bring AGI to the real world.

There’s much more in the rest of the piece, including a discussion of where the next wave of great AI companies could emerge, and what they will look like.
